Blockchain Technology and its Role in Reshaping AI's Data Challenges

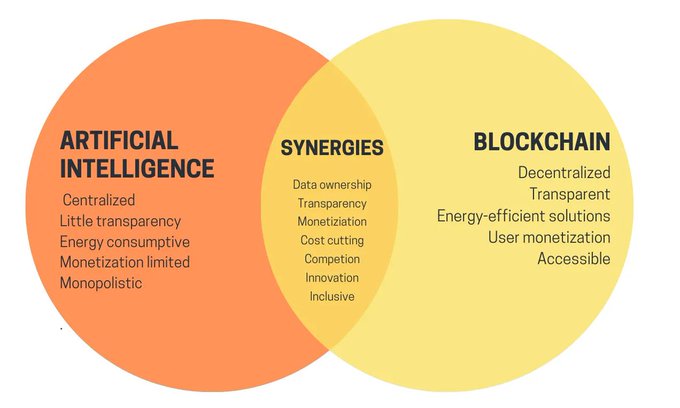

The synergy between artificial intelligence (AI) and blockchain technology is hard to ignore. In this installment of my AI x Blockchain series, we take a close look at Decentralized Data Networks (DDNs) and how blockchain can help address the data problems AI faces.

Understanding AI's Dependence on Data:

Data is the lifeblood of AI: the more high-quality data a model has, the more accurate its predictions tend to be. Common data sources for AI include web scraping, databases, platforms like OpenML and Amazon Datasets, and marketplaces such as Snowflake and Acxiom. Once the data is acquired, cleaning, labeling, and storing it are just as vital to ensuring its quality and relevance.

Hurdles in the AI Data Landscape:

A few challenges stand out when it comes to AI and data:

- The stock of public data isn't growing fast enough to keep up with demand. Some forecasts suggest we could run out of high-quality language data by 2026.

- Quality remains a significant concern: 53% of surveyed stakeholders cite a lack of sufficient high-quality data as a barrier to AI's growth.

- The costs are daunting too. Organizations spend nearly 90% of their time and 58% of their budgets on data collection and preparation alone.

The Promise of Blockchain:

Blockchain technology offers a solution by enabling permissionless data markets. In these markets, individuals can contribute valuable data and be rewarded with tokens in return, strengthening the data economy. Ocean Protocol is a prime example of this in action.
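To make the idea of a token-rewarded data market concrete, here is a minimal Python sketch under stated assumptions. The `DatasetListing` class, its fields, and the pro-rata split rule are hypothetical illustrations, not Ocean Protocol's contracts or API: a buyer pays a fixed price for access, and the payment is divided among the dataset's contributors in proportion to assumed share weights.

```python
from dataclasses import dataclass, field


# Hypothetical toy model of a permissionless data market; not Ocean's contracts.
@dataclass
class DatasetListing:
    name: str
    price: int  # price per access in smallest token units (integers avoid float rounding)
    contributors: dict[str, int]  # contributor address -> share weight (assumed)
    balances: dict[str, int] = field(default_factory=dict)

    def buy_access(self, buyer: str) -> str:
        """Split one payment among contributors pro rata and return an access grant."""
        total_weight = sum(self.contributors.values())
        items = sorted(self.contributors.items())
        paid = 0
        for i, (addr, weight) in enumerate(items):
            if i == len(items) - 1:
                cut = self.price - paid  # last contributor absorbs the rounding remainder
            else:
                cut = self.price * weight // total_weight
            paid += cut
            self.balances[addr] = self.balances.get(addr, 0) + cut
        return f"access:{self.name}:{buyer}"


listing = DatasetListing("weather-2023", price=100, contributors={"alice": 3, "bob": 1})
grant = listing.buy_access("carol")  # alice earns 75, bob earns 25
```

Using integer token units and handing the remainder to one contributor keeps the total paid out exactly equal to the price, which is the property a real on-chain split would also need.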

Delving into Ocean Protocol:

Ocean Protocol brings several key features to the table:

- Privacy is paramount. With its Compute-to-Data mechanism, AI models can be trained on a dataset without the raw data ever leaving its owner, which helps preserve privacy and supports GDPR compliance.

- veOCEAN tokens, obtained by locking OCEAN, provide incentives: holders signal dataset quality by allocating their veOCEAN to datasets and receive rewards in return.

- Datasets can be minted as NFTs, which establishes clear ownership, transparent revenue sharing, and trustless interactions.

- On-chain records establish data provenance, which improves transparency and can help counter deepfakes.

- Costs fall because data owners and consumers transact directly, cutting out middlemen.
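The Compute-to-Data idea can be illustrated with a toy model: the consumer sends an algorithm to the data, rather than the data to the consumer, and only aggregate results come back. The `DataOwner` class, the approved-algorithm allowlist, and the numeric-result check below are hypothetical simplifications, not Ocean's implementation; in plain Python it is the owner's vetting of the algorithm, not the language, that keeps records from leaking.

```python
from statistics import mean
from typing import Callable, Sequence


# Hypothetical sketch of the Compute-to-Data idea; not Ocean's actual API.
class DataOwner:
    def __init__(self, records: Sequence[dict], approved: set[str]):
        self._records = list(records)  # raw records stay here; only vetted algorithms see them
        self._approved = approved      # names of algorithms the owner has reviewed

    def run(self, algo_name: str, algo: Callable[[list[dict]], float]) -> float:
        """Run an approved algorithm next to the data and return only its aggregate result."""
        if algo_name not in self._approved:
            raise PermissionError(f"algorithm {algo_name!r} not approved")
        result = algo(self._records)
        # Only a numeric aggregate may leave; returning rows (or anything else) is refused.
        if not isinstance(result, (int, float)):
            raise ValueError("only aggregate numeric results may be returned")
        return result


owner = DataOwner([{"age": 30}, {"age": 40}, {"age": 50}], approved={"mean_age"})
avg = owner.run("mean_age", lambda rows: mean(r["age"] for r in rows))  # 40
```

The consumer learns the average age but never receives the individual records, which is the privacy property the bullet above describes.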

Unpacking Ocean's Value:

Ocean Protocol addresses multiple challenges in the AI data ecosystem. It reduces the cost of acquiring and sharing data, protects user privacy, and promotes high-quality datasets.
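One way Ocean tries to promote high-quality datasets is veOCEAN curation. The sketch below assumes a Curve-style vote-escrow model, in which voting weight scales with the amount locked and the remaining lock time (up to an assumed four-year maximum), and it splits a dataset's reward pool pro rata among the curators who allocated weight to it. The function names and the simple pro-rata rule are hypothetical; Ocean's actual Data Farming reward formula involves more inputs than this.

```python
from fractions import Fraction

MAX_LOCK_WEEKS = 4 * 52  # assumption: vote-escrow with a roughly 4-year maximum lock


def ve_power(locked: Fraction, weeks_remaining: int) -> Fraction:
    """Vote-escrow weight: proportional to the amount locked and the time left on the lock."""
    weeks = min(max(weeks_remaining, 0), MAX_LOCK_WEEKS)
    return locked * weeks / MAX_LOCK_WEEKS


def split_rewards(pool: Fraction, allocations: dict[str, Fraction]) -> dict[str, Fraction]:
    """Share a dataset's reward pool pro rata to each curator's allocated weight."""
    total = sum(allocations.values())
    if total == 0:
        return {who: Fraction(0) for who in allocations}
    return {who: pool * amt / total for who, amt in allocations.items()}


alice = ve_power(Fraction(1000), 208)  # full-length lock: weight 1000
bob = ve_power(Fraction(1000), 104)    # half the lock time left: weight 500
rewards = split_rewards(Fraction(300), {"alice": alice, "bob": bob})  # alice 200, bob 100
```

Tying weight to lock duration means curators who commit for longer have more say over which datasets get rewarded, which is the intended quality signal.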

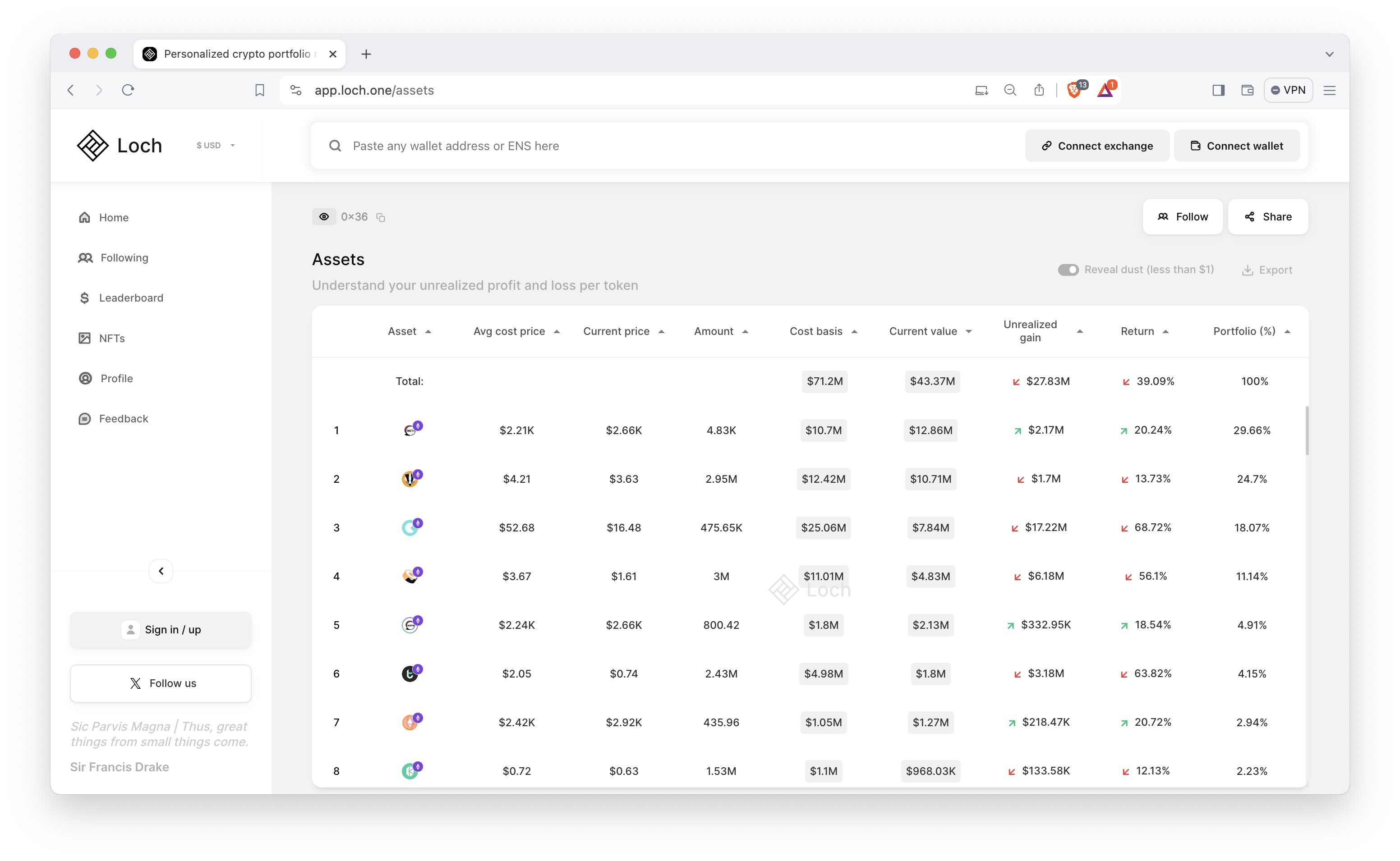

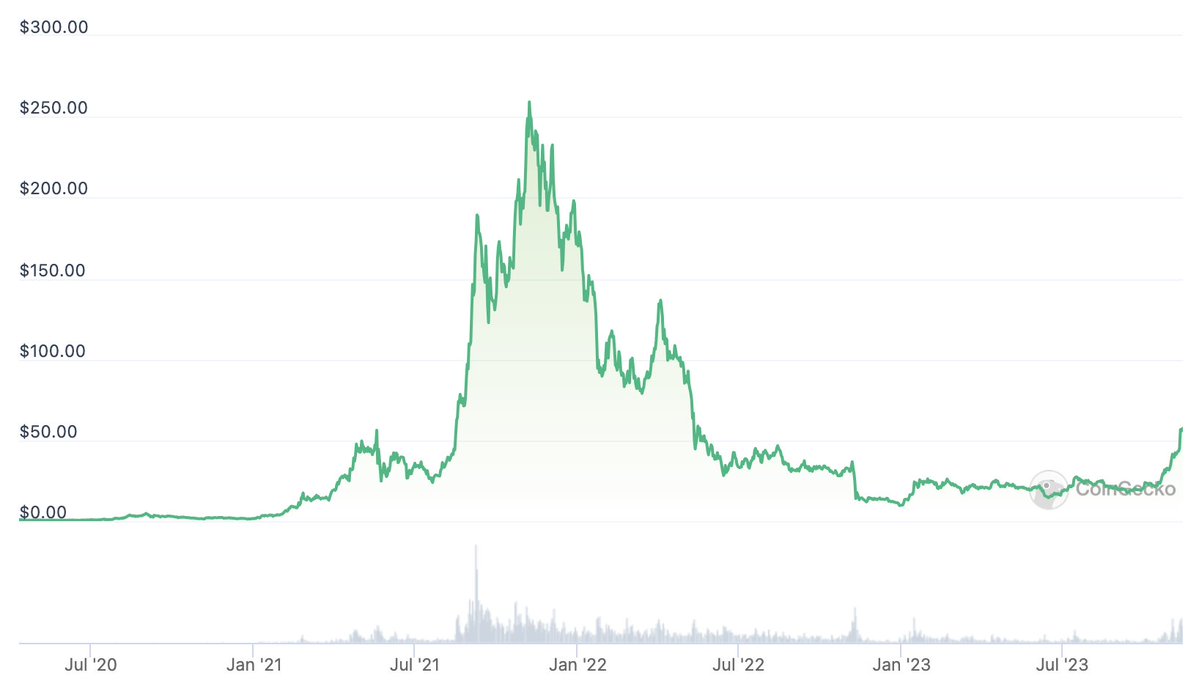

Ocean's Performance Metrics:

However, the Dune dashboard shows that Ocean's paid data transactions and new datasets have declined since the Q4 2020 hype. With fewer than five transactions and new datasets per month, Ocean hasn't scaled as one might expect, especially given the AI boom and the data market's expected 8% CAGR.
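To put the 8% figure in perspective, compound annual growth multiplies a market's size by (1 + r)^n after n years. The short Python sketch below works through that arithmetic for the 8% rate cited above, using a normalized starting size of 1.0 rather than any real market figure.

```python
import math


def project(size_now: float, cagr: float, years: int) -> float:
    """Compound growth: size_now * (1 + cagr) ** years."""
    return size_now * (1 + cagr) ** years


def doubling_time(cagr: float) -> float:
    """Years for a quantity growing at `cagr` per year to double."""
    return math.log(2) / math.log(1 + cagr)


growth_5y = project(1.0, 0.08, 5)
print(f"{growth_5y:.3f}x after 5 years, doubles in {doubling_time(0.08):.1f} years")
# prints: 1.469x after 5 years, doubles in 9.0 years
```

At that rate the data market would grow by roughly 47% in five years, a sharp contrast with fewer than five paid transactions per month on Ocean.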

Pondering the Underwhelming Performance:

Several factors might be contributing to Ocean's tepid performance.

On the supply side, we have:

- Data brokers already serve data owners, which makes adding a blockchain seem redundant.

- Privacy concerns: owners of proprietary data may see no real incentive to share it.

- Confidential computing is not unique to decentralized platforms.

On the demand side, issues arise with:

- A curation mechanism that doesn't guarantee top-tier datasets, along with an over-reliance on the reputations of centralized brokers.

- A lack of standardization, which leads to inconsistencies, especially when combining data from varied sources.

Despite these challenges, Ocean Protocol offers an important takeaway: the core problem lies in execution, not in the underlying idea.

Improving Ocean:

To realize Ocean's potential, several measures could be taken:

- Strengthening incentives to encourage the formation of data DAOs.

- Introducing mechanisms like slashing to penalize subpar datasets.

- Improving the user experience, for example with simpler payment methods such as credit card on-ramps.
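Slashing could work roughly as follows: curators stake tokens behind a dataset, and if the dataset is later judged subpar, a fixed share of every curator's stake is confiscated. The `CurationPool` class, the 20% slash rate, and routing slashed tokens to a treasury are all hypothetical choices for illustration; the sketch also assumes the subpar judgment comes from some separate process (for example, a dispute vote) that is out of scope here.

```python
from dataclasses import dataclass, field

SLASH_RATE_BPS = 2_000  # hypothetical: slash 20% of stake, expressed in basis points


@dataclass
class CurationPool:
    """Hypothetical stake-based curation for one dataset; not an existing Ocean feature."""
    stakes: dict[str, int] = field(default_factory=dict)
    treasury: int = 0

    def stake(self, curator: str, amount: int) -> None:
        if amount <= 0:
            raise ValueError("stake must be positive")
        self.stakes[curator] = self.stakes.get(curator, 0) + amount

    def slash(self) -> int:
        """After an external subpar verdict, move a fixed share of each stake to the treasury."""
        slashed = 0
        for curator, amount in self.stakes.items():
            cut = amount * SLASH_RATE_BPS // 10_000
            self.stakes[curator] = amount - cut
            slashed += cut
        self.treasury += slashed
        return slashed


pool = CurationPool()
pool.stake("alice", 1_000)
pool.stake("bob", 500)
total_slashed = pool.slash()  # alice keeps 800, bob keeps 400, treasury receives 300
```

Because curators lose part of their stake when they back a bad dataset, they have a direct incentive to vet datasets before staking.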

The Future of Data Ownership:

Going forward, a critical step is rethinking how we understand data ownership. Ideally, data sharing would be encouraged, ushering in a more democratized system for capturing data's value.

In conclusion, leveraging blockchain's unique properties can pave the way for a stronger data economy, ultimately propelling AI's continued evolution.

Sep 18, 2023